On the ProjectVRM list, John Wunderlich shared a find that makes clear how advanced and widespread AI-based shopping recommendation has gone so far (and not just with ChatGPT and Amazon). Here it is: Envisioning Recommendations on an LLM-Based Agent Platform: Can LLM-based agents take recommender systems to the next level?

It’s by Jizhi Zhang, Keqin Bao, Wenjie Wang, Yang Zhang, Wentao Shi, Wanhong Xu, Fuli Feng, and Tat-Seng Chua* and is published in the Artificial Intelligence and Machine Learning section of Research and Advances in Communications of the ACM. So it’s serious stuff.

Illustration of the Rec4Agentverse. The left side depicts three roles in the RecAgentverse: the user, the Agent Recommender, and Item Agents, along with their interconnected relationships. In contrast to traditional recommender systems, the Rec4Agentverse has more intimate relationships among the three roles. For instance, there are multi-round interactions between 1) users and Item Agents and 2) the Agent Recommender and Item Agents. The right side demonstrates how the Agent Recommender can collaborate with Item Agents to affect the information flow of users and offer personalized information services.

- Who wrote the CACM piece

- The state of recommendation science

- Who is working on personal AI

- How one can get started

- Forms of personal data to manage

There’s a lot here. The meat of it, for ProjectVRM purposes, starts in section 3. (The first two are more about what we’re up against.) Please read the whole thing and help us think through where to go with the challenge facing us. As we see in section 3, we do have some stuff on our side.

🧑💼 Jizhi Zhang

Affiliation: University of Science and Technology of China (USTC), Data Science Lab (inferred from USTC page) cacm.acm.org+14data-science.ustc.edu.cn+14x.com+14.

Research Interests: Recommendation systems, LLM-based agent platforms, and interactive intelligent systems—his lead authorship and continued work on Prospect Personalized Recommendation on LLM-Based Agent Platform (arXiv Feb 2024) reinforce this arxiv.org.

🧑🔬 Keqin Bao, Wenjie Wang, Yang Zhang, Wentao Shi, Fuli Feng, Tat‑Seng Chua

Affiliations: While CACM doesn’t list this, Jizhi Zhang and Fuli Feng are part of USTC’s Data Science Lab; Tat‑Seng Chua is a distinguished researcher from the National University of Singapore known for multimedia and information retrieval.

Expertise: A mix of recommender system design, LLM-agent evaluation, multimedia analysis, and human-AI interaction—reflecting a blend of USTC’s strengths and CACM relevance data-science.ustc.edu.cn+8cacm.acm.org+8data-science.ustc.edu.cn+8linkedin.com+4arxiv.org+4researchgate.net+4.

🧑🎓 Wanhong Xu

Affiliation: USTC Data Science Lab (USTC LDS), contributor to the same article .

Role: Emerging researcher whose latest publication is this Rec4Agentverse article, indicating an early-career focus in LLM-based recommender systems linkedin.com+8data-science.ustc.edu.cn+8linkedin.com+8.

🧠 Broader Context & Contributions

The group operates at the intersection of Large Language Models, agent-oriented systems, and recommender systems, pioneering a Rec4Agentverse paradigm that treats LLM-powered agents as “recommendable” items collaborating with one another—an evolution of traditional recommendation frameworkscacm.acm.org+5cacm.acm.org+5cacm.acm.org+5.

Their work continues earlier research (e.g., Prospect Personalized Recommendation… in Feb 2024), solidifying their ongoing commitment to expanding intelligent, conversational recommendation platforms arxiv.org.

🧭 Why This Matters

Academic Thought Leadership: Jizhi Zhang and colleagues are pushing forward thinking about how recommendations can evolve when agents—not passive items—are central.

Institutional Backing: All deeply connected to USTC’s Data Science Lab, underscoring strong Chinese academic investment in AI-driven recommender innovation.

Collaborative Reach: Including scholars like Tat‑Seng Chua broadens the research to include international perspectives in multimedia and retrieval systems.

In summary: This team is a multidisciplinary cohort—centered at USTC with international collaboration—who are pioneering the idea that LLM agents can become interactive, dynamic items in recommender systems. Their work positions them at the forefront of intelligent agent systems and could reshape how platforms personalize experiences in the future.

If you’re curious about any individual’s past publications, citation impact, or related work (e.g., on Agent4Rec), I’d be happy to dive deeper!

3. The state of recommendation science

Me:

How large is the emerging science, within the AI field, of recommendations?

ChatGPT:

The science of recommendations, particularly within the AI field, is not just emerging—it’s a massive, rapidly evolving subfield that is both academically rich and commercially dominant. That said, it’s also entering a new phase, driven by the rise of large language models (LLMs), interactive agents, and multimodal platforms.

📚 Academic Scope

1. Established Field: Recommender Systems

Recommender systems (RecSys) are a well-developed domain within AI and machine learning, with roots in the 1990s.

Annual conferences like:

ACM RecSys (since 2007)

SIGIR, KDD, WSDM, and NeurIPS regularly feature recommendation research.

Thousands of papers annually on topics like:

Collaborative filtering

Matrix/tensor factorization

Deep learning for recommendation

Fairness, transparency, and explainability in RecSys

Reinforcement learning and bandit algorithms for personalization🔎 As of 2024, over 60,000 papers in academic databases (e.g., Google Scholar) are tagged or indexed under “recommender systems”.

📈 Current Trends & Expanding Frontiers

2. LLMs + Recommendation = New Subfield

A major shift is underway: integrating Large Language Models (LLMs) into recommendation systems.

These go beyond “you may also like” to:

Use natural language context and dialogue

Generate explanations, not just rankings

Support conversational recommendation

Coordinate agent ecosystems, e.g., multi-agent reasoning environmentsExample paradigms:

RecMind – reasoning-aware recommendation agents

Rec4Agentverse – treating agents as recommendable entities

ChatRecs – integrating LLMs into live user conversations

3. Multimodal & Multitask Integration

Systems increasingly blend text, image, video, behavior, and user feedback.

LLMs allow richer personalization using complex user intent derived from unstructured data.

4. Industry Investment

FAANG companies (especially YouTube, Netflix, Amazon, TikTok, and Spotify) base their core user experience on AI-driven recommendation.

Billions of dollars are spent optimizing:

Ranking quality

Engagement prediction

Real-time inference

Federated and privacy-preserving personalization

🧭 Estimate of Scope

Area

Estimate (2024–25)

📄 Academic papers (RecSys + AI)

60,000+ overall; thousands per year

📚 Top conferences with recommender tracks

20+ (ACM RecSys, SIGIR, KDD, NeurIPS, etc.)

👩💼 Active researchers

Tens of thousands globally

🧠 Emerging subfields

LLM-based Rec, conversational Rec, agent-based Rec, explainable Rec

💰 Industry scale

$10B+ annually invested in personalization tech

🧠 In Summary

The science of recommendation is:

Not new, but rapidly evolving

Core to AI applications, especially with LLMs and agents

One of the most commercially and socially impactful domains in modern AI

Growing into new subfields (e.g. Rec4Agentverse, LLM Rec, multi-agent Rec)Let me know if you’d like a visual map of how the recommendation science ecosystem is structured—or who the top authors and institutions are in this space

That’s an essential and forward-looking question—especially as most “AI for you” today really means “AI about you, for someone else.”

While Big Tech firms dominate personalized AI, a growing ecosystem is focused on truly personal AI—that is, AI you own, that works for you (not advertisers or platforms), and that enhances your agency in the marketplace and your life.

Here are the most notable people, projects, and initiatives advancing truly personal AI today:

🧠 1. Kwaai and pAI-OS (South Africa / Global)

Kwaai is building an open-source, user-sovereign personal AI OS called pAI-OS.

Designed to run locally or at user discretion, with full data ownership, modular agent systems, and plugin capability.

Aligns with IEEE P7012 (“MyTerms”) privacy standards (see below).

Actively exploring intentcasting, customer signaling, and VRM (Vendor Relationship Management).

Site: https://kwaai.ai

🛠️ 2. Indie AI and Personal Agent projects

Several smaller open-source initiatives are trying to build personal agent toolkits that you can host and configure:

Examples:

AutoGen Studio (Microsoft) – allows building agent workflows, some of which can be user-defined. But it’s not yet fully personal or private.

Langroid – open-source framework for local agent-based LLM systems. Designed for extensibility, can be made personal with effort.

OpenDevin, Superagent, Auto-GPT – can be self-hosted, though most are still tool-centric rather than user-centric.

Personal.ai – A commercial startup that says it is personal, but uses centralized storage and fine-tuning, so actual user control is limited.🔍 Reality check: Most of these still rely on external LLMs (like OpenAI or Anthropic), even if they claim to be “your AI”.

🌐 3. Solid Project (by Tim Berners-Lee)

While not AI per se, Solid is a decentralized identity and data storage architecture that allows individuals to store their own data in personal data pods.

AI tools can be layered on top to access only the data you choose to share.

Foundation for personal AI assistants that can work with your data, not on others’ clouds.

https://solidproject.org

📜 4. IEEE P7012 – MyTerms

A standard being developed to flip the notice-and-consent regime—so individuals, not companies, dictate the terms of data use.

Backed by Customer Commons and designed to support intentcasting and personal marketplace signaling.

This enables a personal AI to serve as your agent, enforcing your terms and engaging the marketplace on your behalf.

🧩 5. Project VRM (Vendor Relationship Management)

Initiated by Doc Searls to empower customers with tools to manage vendors, not just the reverse.

Envisions personal tools (including AI) to:

Signal intent

Manage service contracts

Retain records of offers, purchases, warranties

Avoid manipulation, surveillance, and lock-inProject VRM is not a software project per se, but a framework guiding developers to build personal tools that support individual autonomy.

🔒 6. Open-source LLM stacks for local use

Several local LLM projects can be combined with personal data layers to build truly personal AI:

LM Studio – runs local models on desktop

PrivateGPT – local inference + local documents

Ollama – macOS-native interface for fast-running models like LLaMA, Mistral

LangChain / LlamaIndex – frameworks for building RAG systems with local documentsThese aren’t personal AI systems yet—but they are key ingredients.

🧭 Summary

Name / Project

What They Do

Status

Kwaai / pAI-OS

Open, personal AI OS and agent platform

Early but active

Solid

Personal data control layer

Stable; in use

IEEE P7012

Standards for user-enforced data terms

In development

Project VRM

Framework for tools that empower buyers

Thought leadership; toolkits emerging

Local AI frameworks

Tech foundations for personal AI

Available, DIY-friendly

🚧 The Gap

Despite this promising landscape, no major platform today delivers a fully integrated, easy-to-use personal AI that you own, configure, and direct—yet. But:

A personal AI revolution is underway, led by privacy advocates, open-source communities, and a few visionary developers—not Big Tech.

Would you like help putting together a DIY stack for your own personal AI toolkit based on this landscape?

Absolutely. Here’s a breakdown of key people involved in the personal AI movement, followed by a practical DIY stack you can start building today.

👤 People Leading or Advancing the Personal AI Revolution

These individuals aren’t all working on the same projects, but they’re aligned in trying to build AI that’s owned, run, and controlled by the individual—not centralized platforms.

🧑🔬 Tim Berners-Lee

Project: Solid (stewarded by ODI, commercially implemented by companies including TBLs Inrupt)

Focus: Decentralized web and personal data ownership via data pods

Impact: Infrastructure for privacy-respecting personal AI agents

https://solidproject.org

👤 Doc Searls

Project: Project VRM, Customer Commons, IEEE P7012

Focus: Customer empowerment, intentcasting, reversing surveillance capitalism

Impact: Philosophy and standards for customer-directed personal AI

https://projectvrm.org

🧠 Tawanda Kembo & Kwaai team

Project: pAI-OS (personal AI operating system)

Focus: Agent-centric open-source operating system for personal AI

Impact: One of the few efforts aiming to create a complete personal AI stack

https://kwaai.ai

🧑💻 Simon Willison

Project: Datasette, open tools for data management

Focus: Personal data control, lightweight databases, LLM + local tooling

Impact: Key figure in the open data + local AI tooling space

https://simonwillison.net

🤖 Brian Roemmele

Project: Multiplex (personal voice AI)

Focus: Voice-first personal assistants you run locally

Impact: Vocal advocate for true personal AI, often referencing “software you own”

🛠️ A DIY Personal AI Stack (2025 Edition)

This stack assumes local-first, modular, privacy-respecting tools. You can mix and match based on your needs.

🧩 Core Components

Layer

Tools / Projects

Notes

1. OS Layer

Linux, pAI-OS (in dev)

Linux gives flexibility; pAI-OS aims to be a full personal AI OS

2. Local LLM Inference

Ollama, LM Studio, PrivateGPT, LocalAI

Run models like LLaMA 3, Mistral, Phi-3 entirely offline

3. Vector Database

Chroma, Weaviate, Milvus, or SQLite with FTS5

Store and retrieve embedded text data

4. Personal Indexing

LlamaIndex, LangChain, AutoGen, GPTPilot

Feed personal documents and data to your LLM

5. Personal Data Storage

Solid POD, Datasette, Obsidian

Your life’s data, structured and queryable

6. Personal Agent Framework

Superagent, CrewAI, Langroid, AutoGen

Design agents that act on your behalf, modular and scriptable

7. Front-end Interface

Gradio, Streamlit, Tauri app, Electron

Local GUI/UX to interact with your agent

8. Voice Interface (optional)

OpenVoice, Vosk, Whisper.cpp, Coqui TTS

Build voice-in/voice-out agent on your machine

🧠 Optional Add-ons

Offline search: Chat over your files, PDFs, photos, and emails with LlamaIndex + PrivateGPT

Calendar/notes: Sync with Obsidian or local markdown-based knowledge base

Browser agent: Auto-control browser via Puppeteer/Playwright for marketplace navigation

🧭 What’s Still Missing?

True personal OS integration (like what pAI-OS is trying to do)

Seamless data portability and contract enforcement (IEEE P7012 is working on it)

Trusted personal AI app ecosystem (some apps, like Beeper or Obsidian, are getting close)

Would you like a tailored recommendation for building a personal AI stack based on your technical skill level or use cases (e.g., journaling, research, negotiation, shopping, data querying)?

Great — with your setup (modest technical skills, spare laptop, blazing-fast fiber), you’re in an excellent position to begin building a practical personal AI system without diving too deep into complex dev work. Here’s a recommended stack tailored to your current skills and goals:

🧰 YOUR PERSONAL AI STARTER STACK (2025)

🔧 1. Platform Base

Use a Linux distribution designed for ease of use and AI tinkering:

Recommended: Pop!_OS or Ubuntu 24.04

Easy GUI, good hardware compatibility, lots of online support

Optional: Use your laptop in “air-gapped” mode for privacy and full local control

🤖 2. Run a Local LLM with LM Studio

LM Studio (macOS/Windows/Linux) lets you run LLaMA 3, Mistral, Phi-3, and others without coding.

Built-in UI — chat with models locally, no internet required after download.

You can download 7B or 13B models based on your hardware.

Best for: private journaling, summarizing files, answering questions.✅ Why it’s ideal for you:

GUI-based

Just click and download models

No API keys or command-line needed once installed

📁 3. Chat with Your Files (PrivateGPT / LlamaIndex)

PrivateGPT or GPTPilot can let you ask questions across PDFs, emails, and notes stored on your machine.

Start simple: drop files into a folder, let the AI index them.

Optionally install via Docker (one command), or use their prebuilt apps.✅ Use case:

“Summarize this research PDF”

“What did I write about project X last month?”

“Find the email where someone mentioned a refund”

📚 4. Knowledge Base + Journaling with Obsidian

Obsidian = Markdown-based knowledge base.

Everything you write stays local.

You can point LM Studio to your notes to query your own memory.✅ Easy way to:

Keep private journals

Index your life

Ask your AI things like “What are my goals for June?”

🗂️ 5. Personal Data Management with Datasette

Datasette by Simon Willison lets you browse and query your own data with simple SQL and CSV files.

Feeds AI with structured personal info (purchases, inventory, plans, etc.)

You can install it with a single terminal command:

bash

pip install datasette datasette serve your-data.db

datasette serve your-data.db✅ Combine with LM Studio to get responses like:

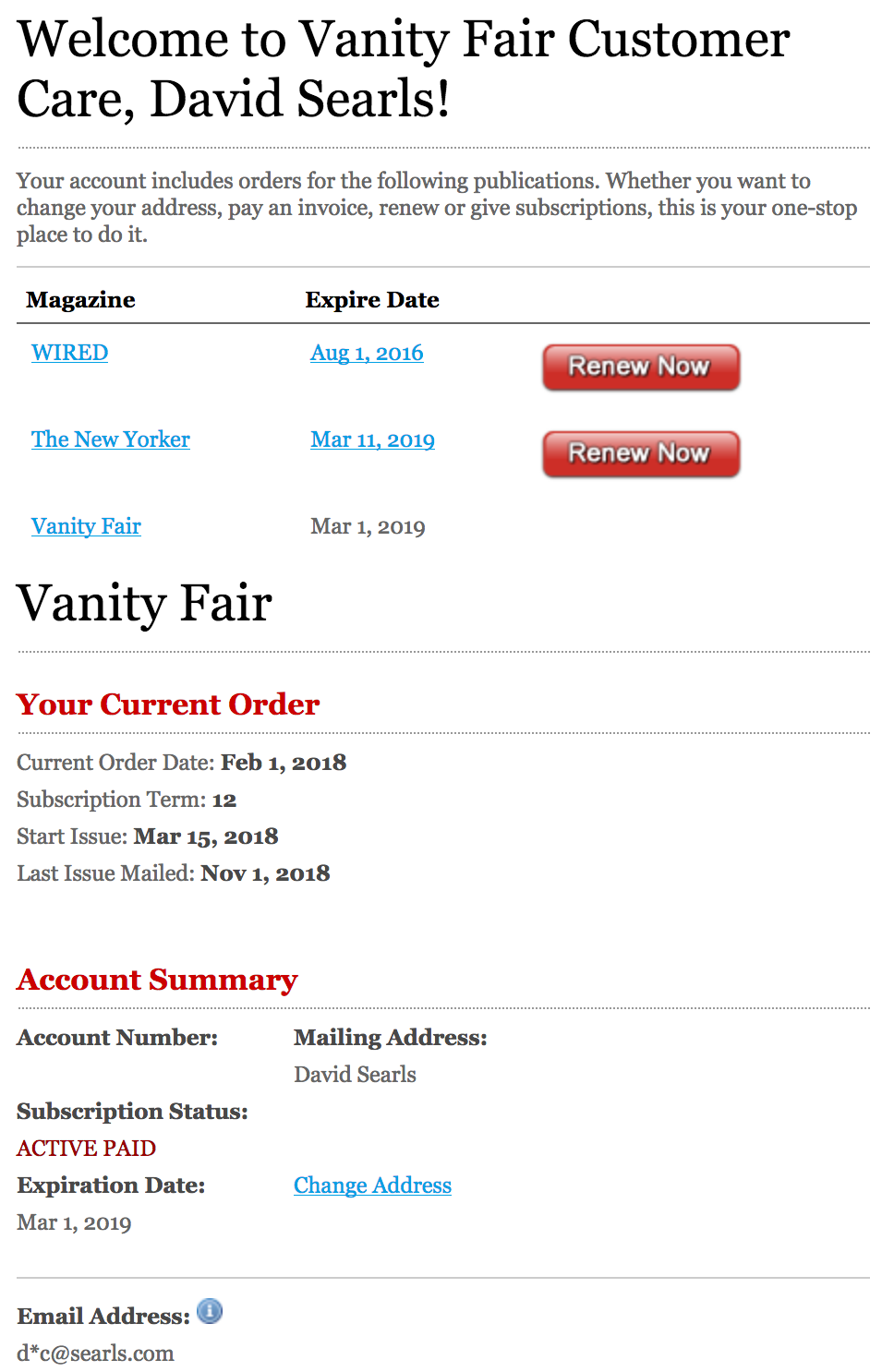

“What subscriptions do I need to cancel?”

“When did I last back up my photos?”

🌐 6. Optional: Browser Helper Agent (Local, Simple)

Use AutoBrowser or a simpler Puppeteer script to automate browsing tasks (like checking prices, news, account activity).

Start with prebuilt scripts. No coding needed if you copy/paste working examples.

🔒 7. Privacy Boosters

Run Tailscale or WireGuard to access your AI remotely if needed.

Use LocalAI or Ollama if you later want to run everything without GUI (more advanced).

🧭 Putting It All Together

Task

Tool

Chat privately with an AI

LM Studio

Summarize your own notes or PDFs

PrivateGPT or GPTPilot

Store and reflect on your life data

Obsidian, Datasette

Manage subscriptions and tasks

Datasette + Chat

Ask about your files or history

LlamaIndex or GPT over Obsidian notes

Use voice later (optional)

Whisper.cpp, OpenVoice (easy tools)

🪜 Next Steps (in plain order)

Install Pop!_OS or Ubuntu on your spare laptop

Install LM Studio and download a 7B model like Mistral or LLaMA 3

Start using it to answer personal questions and summarize documents

Add Obsidian to start journaling and reflecting

Try PrivateGPT or Datasette to search your past or track your life

Would you like a checklist or an install script to get started with this stack? I can also help you set up a file structure to begin tracking and querying your own data.

Thanks for the detailed breakdown — you’re describing the foundation of a true personal data architecture: structured, queryable, and under your control. Let’s build you a file structure and management strategy that matches your needs without overcomplicating the tech.

📁 Recommended Top-Level Folder Structure

This folder structure is designed to be future-proof, machine-readable, and human-usable. Use plain folders, CSV/JSON/Markdown files, and optional SQLite databases to store structured data. You can later layer tools (like LLMs or Datasette) on top.

🧠 Strategy for Managing It All

1. Use Consistent File Types

| Data Type | File Format |

|---|---|

| Structured entries | .csv, .json, .sqlite |

| Notes or logs | .md (markdown) |

| Documents | .pdf, .docx, .txt |

| Images/attachments | store in attachments/ subfolder per topic |

2. Naming Conventions (Machine + Human Friendly)

Use ISO-like formats to make things sortable and parseable:

3. Data Capture Tools

You don’t need to build it all manually:

-

Use Obsidian for ongoing notes (e.g. travel journal, work logs)

-

Use Paperless-ngx (optional) to OCR and tag your scanned PDFs (health, finance)

-

Use GPS History Export from Google and Apple for travel/locations

-

Use email filters or Zapier to auto-download bills, statements, confirmations

4. Querying and Reviewing

Add one or more of these later:

-

Datasette → browse and search CSVs/SQLite locally

-

LM Studio + PrivateGPT → ask, “When did I last visit Dr. Chen?” or “What devices are in storage?”

-

LlamaIndex → index your file tree and let AI search/summarize it

✅ Summary of Benefits

| Goal | This Setup Delivers |

|---|---|

| Total local control | You decide access, storage, retention |

| Future AI-readiness | Structured enough for agents to use |

| Easy to back up | Plain files, portable databases |

| Modular growth | Start simple, layer in AI when ready |

Would you like me to generate a starter template with folder structure and some CSV/Markdown file formats filled in with mock data for each domain? That way you can load it directly into your system and start customizing.

Me again:

That’s it for now.

From the

From the